SSO Guide (Deprecated)

This guide is deprecated. See the OAuth2 guides instead.

How to configure SSO

Step 1

Generate a self-signed RSA key pair in a PKCS12 keystore, valid for 3650 days (about 10 years):

mkdir cert

keytool -genkeypair -alias ui-for-apache-kafka -keyalg RSA -keysize 2048 \
  -storetype PKCS12 -keystore cert/ui-for-apache-kafka.p12 -validity 3650

Step 2

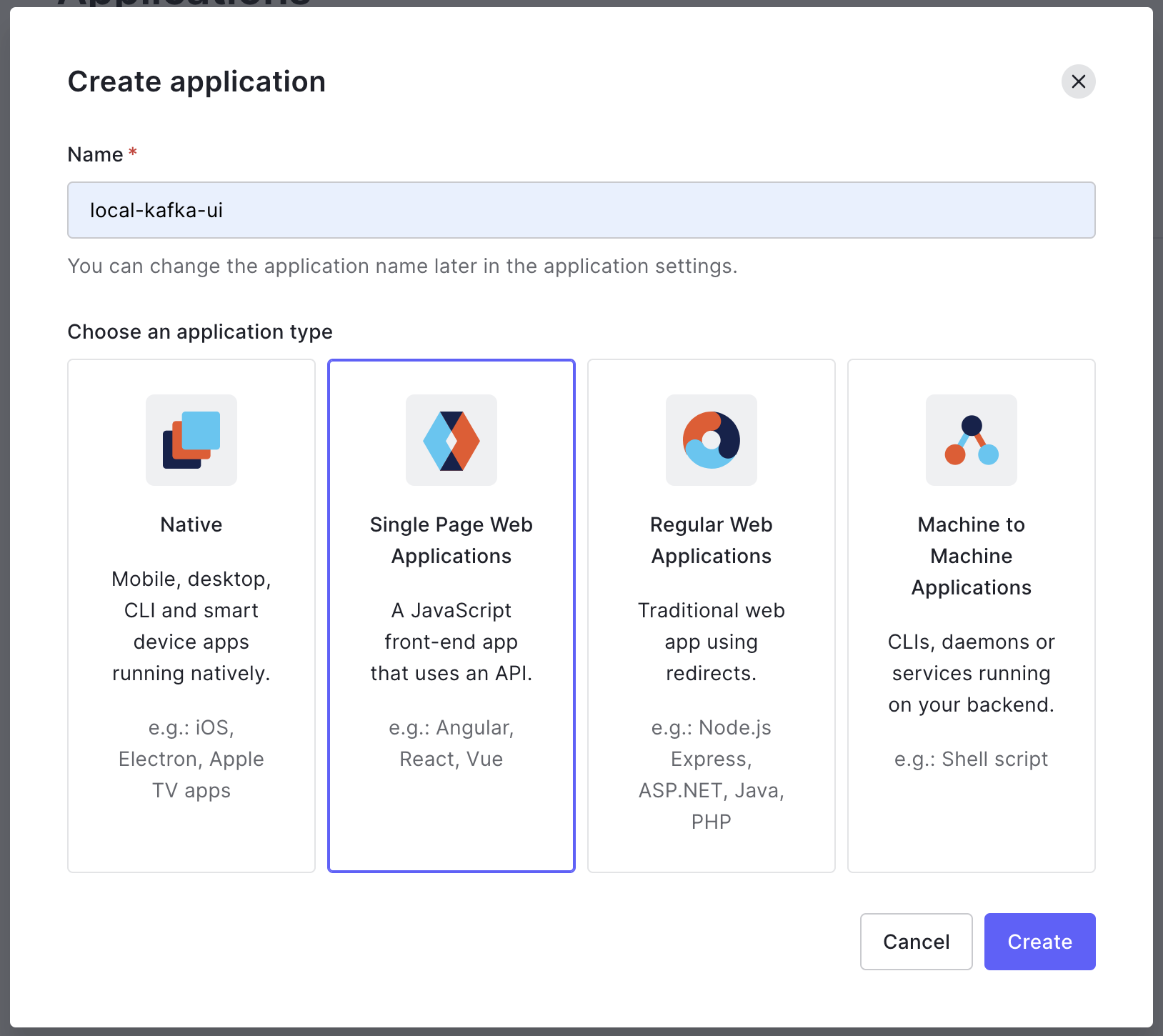

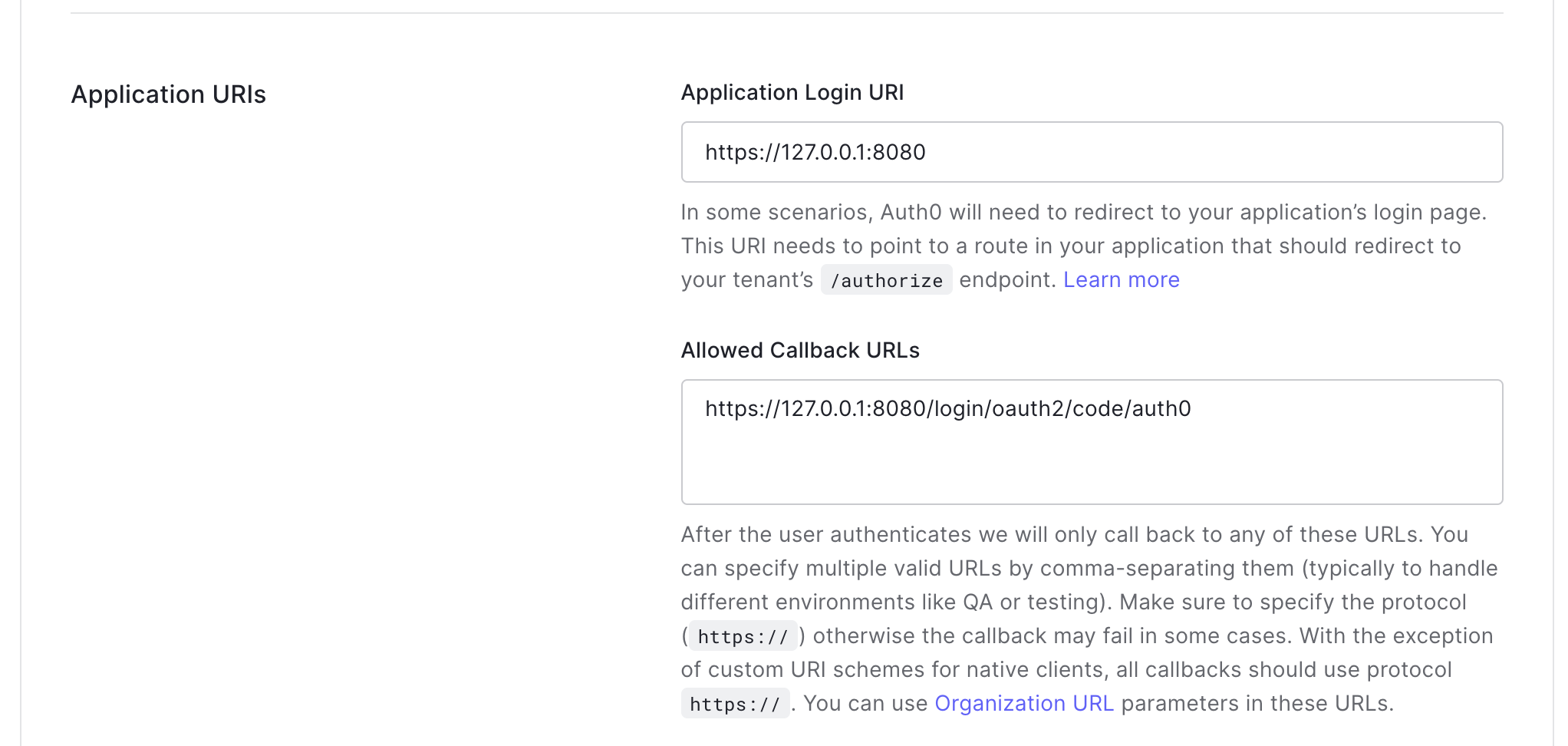

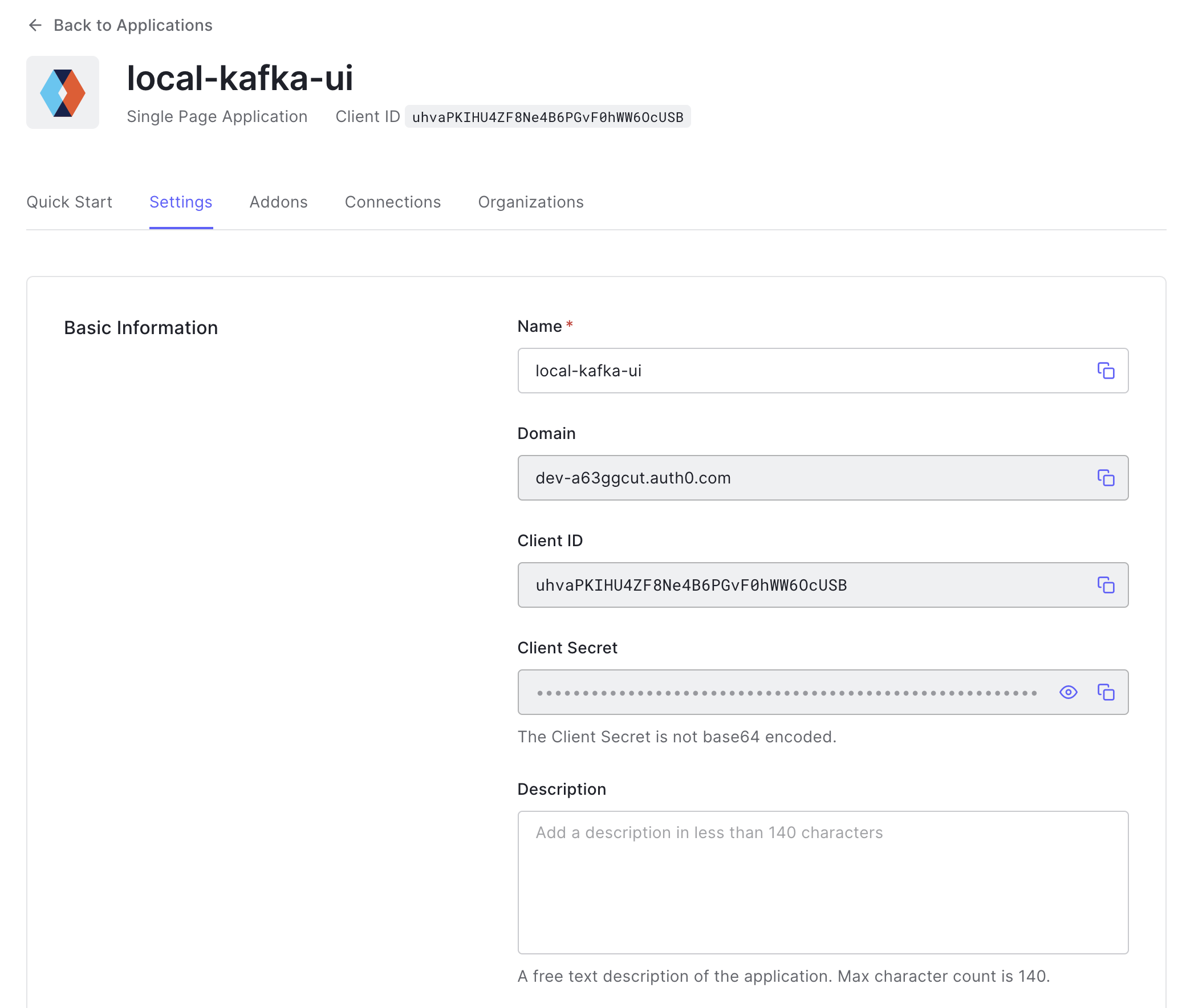

Step 3

Step 4 (Load Balancer HTTP) (optional)

Step 5 (Azure) (optional)